A while back, I was playing around with a bit of 8086 assembly. I’m not much of a programmer, but I’ve done some C{,++}, Python, PHP (sorry) and bits of a few others in the past. All of these are quite high level though, and I felt like going back to basics a bit. I chose to start with MS-DOS, since I thought (perhaps incorrectly?) that it would be easier to program. And I figured it would probably be a nice bit of computing history to learn anyway.

I soon moved on from MS-DOS and decided to set myself a programming challenge of a bare-metal Mandelbrot generator. In this post, I’ll give a bit of background about the Mandelbrot set, and explain my code.

MS-DOS

I started off with this MS-DOS assembly tutorial. It seems to be broadly unchanged since it was first crawled by the Wayback Machine in 2002. Nice. I chose to use NASM instead of A86 but otherwise the tutorial’s pretty good. If you’re lazy, here’s a virtualbox image, with MSDOS 6.22 and NASM installed. My code is all in C:\PROGRAMM, in case anyone wants to use it as a reference.

This was fun for a while, but eventually I got bored of DOS programming and decided to try some bare metal stuff – and somehow, perhaps vaguely influenced by demoscene style demos, I ended up setting myself the challenge of writing a bare-metal Mandelbrot generator within a 512-byte bootsector.

The Mandelbrot Set

The Mandelbrot Set is the set of points, c, on the complex plane such that the sequence zn+1=zn2+c (where z0=0) remains bounded. To clarify, here’s an example:

- Let’s check if the point (-0.5+0.5j) is in the set. Set c=(-0.5+0.5j)

- Now let’s perform the first iteration. (-0.5+0.5j)²+(-0.5+0.5j)=-0.5

- And another iteration: (-0.5)²+(-0.5+0.5j)=-0.25+0.5j

- And another: (0.25+0.5j)²+(-0.5+0.5j)=-0.6875+0.25j

- We can keep on iterating again and again like this, and we find that the numbers stay small. Eventually, we might conclude that the sequence is bounded, and the point is therefore in the set.

In fact, if we plot each iteration, we can see that it’s going around in a strange, almost spirograph-like shape:

But overall, the general trend seems to be going around in a closed circle, rather than shooting off to infinity, so it would probably be fairly safe to say here that the sequence is bounded. If you’re not convinced, here’s a plot of the magnitude at each iteration:

To take another example, let’s try it with the point (0.5,0.5). The iterations go:

- 0.5+1j

- -0.25+1.5j

- -1.69-0.25j

- 3.28+1.34j

- …

- 990294279-77569977j

So, this looks like it’s shooting off to infinity… and fast.

But this raises an interesting question: at what point do we decide whether the sequence is or isn’t bounded? In general we’d have to continue iterating to infinity to know for sure. Perhaps we should set a threshold, beyond which we declare it to be unbounded? But where should it be? And how do we know that sequences which exceed that threshold aren’t coming back?

Well, as it turns out, it can be proven (see A6d) that if any point leaves a circle of radius 2, (ie |zn|>2), it will never return. So a reasonable approach is to compute a fixed number of iterations, and then check whether the result is less than 2 in absolute value.

Here are two simple implementations I wrote just to demonstrate the idea. First, this is for the C++-minded people:

#include <iostream>

#include <complex>

using namespace std;

int main(){

cout << "P1 800 800 ";

for(int i=0;i<800;i++){

for(int j=0;j<800;j++){

complex<float> c((j-400)/200.0,(i-400)/200.0); //scale the indicies to floats in the range -2..2

complex<float> z(0,0); // initialise z to 0

for (int iter=0;iter<64;iter++) //iterate 64 times

z=z*z+c;

cout << (abs(z)<2)?"1 ":"0 "; //output a black pixel if in the set, white otherwise

}

}

cout<<endl;

return 0;

}

This goes through each pixel one-by-one and for each one runs 64 iterations. The output is a PBM file to stdout, so you’ll have to pipe it and open it in a compatible viewer (GIMP works) or convert it to a more common format.

Or, if you’re more of a MATLAB fan:

c=ones(800,1)*linspace(-2,2,800);

c=complex(c,c');

z=zeros(800,800);

for i=1:50

z=z.^2+c;

end

imshow(abs(z)<2)

The same idea as the C++ example, but using vectorisation rather than iterating through pixels, and using MATLAB’s internal imshow command to display the image.

The Bare-Metal Version

With all that setting the scene done, let’s get down to how I wrote an 88-byte 8086 bare-metal mandelbrot generator.

A note on arithmetic

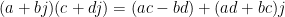

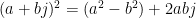

The general structure of the C++ example can be carried over, but the arithmetic gets difficult. Firstly, the complex number datatype in C++ is an abstraction. The processor doesn’t understand complex numbers (not an 8086 at least). So we’ll have to treat them as two reals. Addition and subtraction are trivial. For multiplication we just have to remember that:

and specifically:

This is conceptually not too difficult, but it does mean that complex operations take up a lot of program space.

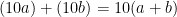

The second thing to consider is that floating point operations don’t exist in 16-bit intel. We’re going to have to work in integer instructions instead. With a little bit of trickery though, we can create a very simple fixed-point system. Essentially, we just represent every number as a fixed multiple of its true value. Say, this could be 10. Addition (and by extension, subtraction) is consistent:

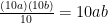

Multiplication works too, with the caveat that we have to divide by the scale factor after:

It’s for this reason that it’s best for that multiple to be a power of two: this reduces the division to a right shift.

For example, here is how we might multiply two numbers with a scaling of 1/32:

mov al,73 ; move the number 2.28 (73/32) into al

mov bl,-193 ;move -6.03 (-193/32) into bl

imul bl ; multiply bl (implicitly, with al). stores result in ax.

sar ax,5 ; shift right by 5 places to divide by 2^5=32

Note the use of sar rather than shr to preserve the sign.

The code

So, without further ado, let’s start deconstructing my code, a section at a time:

[bits 16]

[org 7c00h]

mov ax,0fh

int 10h

First a bit of initialisation – instructions to the assembler that the code should be 16-bit, and will be loaded into memory at location 0x7c00.

Then, we call BIOS interrupt 10h with AH=0 (change video mode) and AL=0fh (640×350 mono resolution).

This video mode will fit a nice big 512×256 canvas on the screen.

Total assembled size so far: 5 bytes

mov dx,-128 ;start on the first line

yloop:

mov di,-256 ;go to the beginning of the line

xloop:

;set z=c initially - start on iteration 1

mov si,di

mov bp,dx

xor ax,ax ; set colour to black (al=0) and iteration counter to zero (ah=0)

So, here we initialise the two variables for our c value. DX will contain the y value and DI the x value. I’m starting at y=-1 and x=-2, using a scaling factor of 1/128. My canvas is to be y from -1 to 1 and x from -2 to 2. The z variable is stored with its real part in SI and its imaginary part in BP. We skip out the zeroth iteration here by initialising z=c.

Later, the interrupt to write a pixel to the screen will read the pixel value from AL. This wants to be initialised to zero (black). If the pixel is in the set, this will be changed to one (white) later. Otherwise it will be unchanged.

At the same time, I want to set an iteration counter to zero. Sneakily, I’ve chosen to use the other byte of AX for this, so that I can set both the pixel value and the iteration counter to zero in a single instruction. This single xor saves two bytes vs two 8-bit literal moves.

This block: 12 bytes, So far: 17 bytes.

iterate:

;calculate Im(z^2)=2xy

mov cx,bp

imul cx,si

jo overflow

sar cx,6 ;maybe mov 6 into a register first?

;cx contains Im(z^2)

At each iteration step we need to calculate  . To do this we need to calculate the real and imaginary parts of

. To do this we need to calculate the real and imaginary parts of  , then add these to the real and imaginary parts of c.

, then add these to the real and imaginary parts of c.

As the comment suggests, here we’re calculating  , which as I showed previously is

, which as I showed previously is  . We copy

. We copy  into CX, since we’ll need it again later and we don’t want to clobber it. Then we multiply this by SI (

into CX, since we’ll need it again later and we don’t want to clobber it. Then we multiply this by SI ( ) and store the result in CX. The value in this register should now be

) and store the result in CX. The value in this register should now be  (note what I said about fixed-point multiplication earlier). Ignoring the conditional jump for now, we would then want to divide by 128 to get

(note what I said about fixed-point multiplication earlier). Ignoring the conditional jump for now, we would then want to divide by 128 to get  . Except what we want is actually

. Except what we want is actually  , so we can save ourselves a multiplication by just dividing by 64 instead (right shift by 6).

, so we can save ourselves a multiplication by just dividing by 64 instead (right shift by 6).

Now, back to the jump. This is how we catch the sequences which go off to infinity – we’ll see where it goes later. In the C++ example, we used abs(z) to check whether we were inside an r=2 circle. But calculating an abs value is expensive. It requires us to square two numbers and sum them. But consider this: any point which leaves the r=2 circle will eventually go off to infinity. If I wanted to I could use an r=3 circle, or an r=1,000,000 circle – it would just potentially take more iterations. Any region which completely contains the r=2 circle will do the job. So how about a rectangle?

My jump is conditional on  being greater than 32768/128 in magnitude (the signed 16-bit max, after scaling). That is,

being greater than 32768/128 in magnitude (the signed 16-bit max, after scaling). That is,

So, provided that |Im(c)|<6 - which it always will be since we're only working with y in the range of -1 to 1 - this will contain the whole of the r=2 circle. Perfect.

This block: 10 bytes, So far: 27 bytes

[code];calculate Re(z^2)=x^2-y^2

mov bx,si ;we will work in the BX register for now

add bx,bp ;bx contains x+y

sub si,bp ;si contains x-y

imul si,bx

jo overflow

sar si,7 ;si contains Re(z^2)[/code]

And now to calculate

And now to calculate  . As noted previously, this is given by

. As noted previously, this is given by  . Earlier versions of my code calculated exactly this – multiplying each part by itself, then subtracting one from the other. Then I realised I was missing a trick here – I could factorise

. Earlier versions of my code calculated exactly this – multiplying each part by itself, then subtracting one from the other. Then I realised I was missing a trick here – I could factorise  , thus gaining an ADD (+2 bytes), but losing an IMUL (-3 bytes). A saving of 1 byte – score!

, thus gaining an ADD (+2 bytes), but losing an IMUL (-3 bytes). A saving of 1 byte – score!

After we’ve calculated  with the add bx,bp, we no longer need

with the add bx,bp, we no longer need  (SI), so we save ourselves an MOV by clobbering it in the SUB instruction.

(SI), so we save ourselves an MOV by clobbering it in the SUB instruction.

Again, we check for overflow and SAR 7 to take out the factor of 128. Here, the overflow checks if  , ie

, ie  . Over the range of |Re(c)|<2 - exactly the space we're working in - this completely contains the r=2 circle. In fact, at the far left and right edges of the canvas, they just touch. An illustration is shown to the right: including the [latex]abs(z^2)<2[/latex] criterion in grey and the rectangular criterion we're using here in blue. The rectangle moves around relative to the axes depending on the point being calculated.

This block: 14 bytes, So far: 41 bytes

[code];calculate z'=z^2+c

add si,di

add cx,dx

mov bp,cx

;do another iteration

inc ah

jno iterate[/code]

Here are the final steps to calculate [latex]z_{n+1}[/latex]. We then increment the iteration counter, AH, and unless it overflows (passes 255), we go round again. Whee! 255 iterations is thoroughly excessive, but it allows us to just check overflow, rather than doing a CMP with an immediate value, which would cost 3 bytes.

This block: 10 bytes, So far: 51 bytes

[code];iterations are over

inc al ; if we've gotten all this way, set the colour to white

overflow:[/code]

If we get here just by the natural progression of the program, that will be because we went through 255 iterations without overflowing. Therefore the pixel is in the set, so we set it to white - a value of 1. Since I know its value before is zero, I can use an INC (2 bytes) instead of a MOV immediate (3 bytes).

If we get here via one of the jump on overflows, we skip out of this instruction and the pixel remains black.

This block: 2 bytes, So far: 53 bytes

[code];now write a pixel

mov ah,0ch ; write pixel interrupt

xor bh,bh

mov cx,di

add cx,320

add dx,175

int 10h

sub dx,175[/code]

Here we use another INT 10h interrupt to write the pixel to the screen. We set AH=0Ch for the "write a pixel" command, set BH=0 for page zero (using XOR- no size advantage over MOV immediate here, but faster), and stick the coordinates in CX and DX (in fact, I chose to use DX to store the y coordinate anyway, to save a MOV instruction here). Since our coordinate system was centred around zero, we add 320 and 175 to the x and y coordinates respectively, to centre the image on the screen. After the interrupt, we have to take the 175 back off DX, since it serves as our loop counter so we can't clobber it.

All of these ADD and SUB immediates are VERY expensive instructions - each one takes 4 bytes. I haven't thought of a way to optimise this though.

This block: 20 bytes, So far: 73 bytes

[code];loop around, do the next pixel

inc di

cmp di,255

jne xloop

;or if we've gotten to here, draw the next row

inc dx

cmp dx,127

jne yloop[/code]

And some loop logic. We go onto the next pixel in the line (inc DI), and loop around unless the pixel value's 255 (end of the line). This CMP literal is expensive (4 bytes) but not easily avoided - since it's a signed type (and we're only using the lower 9 bits anyway), we can't just test for overflow. Outside of this, we have the same structure to loop through lines.

This block: 13 bytes, So far: 86 bytes

[code]cli

hlt

db $-$$ ;tell us the length of the code

times 510 - ($ - $$) db 0

dw 0xAA55[/code]

And finally a little bit of boilerplate: clear BIOS interrupts and halt. The db $-$$ line is a little hack to make it easier to see the size of the program - it just inserts a byte of value (this memory location-memory location of program start). Then I just look at the code in xxd and the last byte before the long run of zeros is the size of the program.

It needs to be padded up to 512 bytes (the size of a boot sector), with the magic word AA55h at the end to indicate that it's bootable.

And so that's a final 2 bytes of executable code (I'm not counting the padding, of course), bringing us up to 88 bytes!

The complete code is here: mandelbrot-bootsector.asm

. Over the range of |Re(c)|<2 - exactly the space we're working in - this completely contains the r=2 circle. In fact, at the far left and right edges of the canvas, they just touch. An illustration is shown to the right: including the [latex]abs(z^2)<2[/latex] criterion in grey and the rectangular criterion we're using here in blue. The rectangle moves around relative to the axes depending on the point being calculated.

This block: 14 bytes, So far: 41 bytes

[code];calculate z'=z^2+c

add si,di

add cx,dx

mov bp,cx

;do another iteration

inc ah

jno iterate[/code]

Here are the final steps to calculate [latex]z_{n+1}[/latex]. We then increment the iteration counter, AH, and unless it overflows (passes 255), we go round again. Whee! 255 iterations is thoroughly excessive, but it allows us to just check overflow, rather than doing a CMP with an immediate value, which would cost 3 bytes.

This block: 10 bytes, So far: 51 bytes

[code];iterations are over

inc al ; if we've gotten all this way, set the colour to white

overflow:[/code]

If we get here just by the natural progression of the program, that will be because we went through 255 iterations without overflowing. Therefore the pixel is in the set, so we set it to white - a value of 1. Since I know its value before is zero, I can use an INC (2 bytes) instead of a MOV immediate (3 bytes).

If we get here via one of the jump on overflows, we skip out of this instruction and the pixel remains black.

This block: 2 bytes, So far: 53 bytes

[code];now write a pixel

mov ah,0ch ; write pixel interrupt

xor bh,bh

mov cx,di

add cx,320

add dx,175

int 10h

sub dx,175[/code]

Here we use another INT 10h interrupt to write the pixel to the screen. We set AH=0Ch for the "write a pixel" command, set BH=0 for page zero (using XOR- no size advantage over MOV immediate here, but faster), and stick the coordinates in CX and DX (in fact, I chose to use DX to store the y coordinate anyway, to save a MOV instruction here). Since our coordinate system was centred around zero, we add 320 and 175 to the x and y coordinates respectively, to centre the image on the screen. After the interrupt, we have to take the 175 back off DX, since it serves as our loop counter so we can't clobber it.

All of these ADD and SUB immediates are VERY expensive instructions - each one takes 4 bytes. I haven't thought of a way to optimise this though.

This block: 20 bytes, So far: 73 bytes

[code];loop around, do the next pixel

inc di

cmp di,255

jne xloop

;or if we've gotten to here, draw the next row

inc dx

cmp dx,127

jne yloop[/code]

And some loop logic. We go onto the next pixel in the line (inc DI), and loop around unless the pixel value's 255 (end of the line). This CMP literal is expensive (4 bytes) but not easily avoided - since it's a signed type (and we're only using the lower 9 bits anyway), we can't just test for overflow. Outside of this, we have the same structure to loop through lines.

This block: 13 bytes, So far: 86 bytes

[code]cli

hlt

db $-$$ ;tell us the length of the code

times 510 - ($ - $$) db 0

dw 0xAA55[/code]

And finally a little bit of boilerplate: clear BIOS interrupts and halt. The db $-$$ line is a little hack to make it easier to see the size of the program - it just inserts a byte of value (this memory location-memory location of program start). Then I just look at the code in xxd and the last byte before the long run of zeros is the size of the program.

It needs to be padded up to 512 bytes (the size of a boot sector), with the magic word AA55h at the end to indicate that it's bootable.

And so that's a final 2 bytes of executable code (I'm not counting the padding, of course), bringing us up to 88 bytes!

The complete code is here: mandelbrot-bootsector.asm

The result

The code is compiled with nasm:

$ nasm -o mandelbrot mandelbrot-bootsector.asm

And run in qemu:

$ qemu-system-i386 -video cirrus -fda mandelbrot -net none

Here’s a screenshot and a video of it running:

Possible Optimisations

I’ve no doubt this code could be made smaller by someone more skilled than I am. I can see a few areas for potential optimisation, but can’t think how to implement them without breaking other things. For example, when writing the pixels to the screen, there are 3 ADD/SUB immediate instructions. These take up 4 bytes each – if I could have the immediate values in a register instead, that would save two bytes per instruction. But alas, I’m already scraping the bottom of the barrel for registers (I tried using SP, but since BIOS interrupts apparently use the stack, that breaks things).

Similarly, the CMP literals for the loop logic are pretty expensive. Avoiding literals is always desirable.

Perhaps it’s possible to save operations by foregoing the BIOS interrupt to write pixels and just write to video memory instead? I haven’t looked into this at all.

Dissolve Animation

I made another version of my code which makes the set “dissolve” in. Essentially it uses an LFSR to cycle through pixel values, rather than a loop. I think it gives a pretty cool visual effect. I was going to write more on it, but this post is getting long enough already, so I’ll just leave the code and a video here if anyone’s interested. It’s not as heavily optimised as the code above.

mandelbrot2-ver4.asm

Conclusion

This has turned out to be a very long blog post (my word count is saying over 2,700 words) but hey, I guess I had a lot to say. I had great fun learning assembly with this programming challenge, and I hope you’ve found my explanation interesting interesting too. If you can optimise it any more, I’d be interested to see your results, so do let me know in the comments!